Debates about facial recognition technology have intensified in the United States. The technology is now used at concert venues and sports arenas, raising questions about safety and privacy. For example, a kiosk at a 2018 Taylor Swift concert reportedly scanned faces to check for known stalkers, a measure meant to protect the singer and her fans that also raised privacy concerns.

Madison Square Garden is facing legal challenges over its use of facial recognition technology, which it allegedly used to identify and bar certain individuals from its venues. The case highlights the ethical issues in a £3.8 billion industry that entertainment venues are adopting quickly.

Privacy advocates say current laws don’t keep up with technology. “When security measures start recognising concertgoers better than their own friends, we’ve crossed into uncharted territory,” says James Harper. On the other hand, law enforcement sees it as a tool for preventing crime.

The debate is intensifying as lawmakers try to strike a balance, with 68% of Americans worried about biometric data collection. How to reconcile safety with privacy is at the heart of this digital rights debate.

Understanding Facial Recognition Systems

Facial recognition technology is at the heart of both innovation and controversy. It changes how we identify people and raises big questions about its use. This part explains how it works and the challenges it faces in real life.

Core Functionality and Current Applications

Today’s facial recognition uses biometric matching algorithms to scan unique facial details. It does two main things:

Biometric Identification Mechanisms

Verification (1:1 matching) checks whether someone is who they claim to be, as when unlocking a phone. Identification (1:many matching) searches a database for possible matches, as in criminal investigations.
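The difference between the two modes can be sketched in a few lines of Python. This is a minimal illustration rather than a description of any vendor's system: it assumes faces have already been converted into numeric embeddings, and the function names (`verify`, `identify`), the toy three-dimensional vectors and the 0.8 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two embedding vectors, from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.8):
    """1:1 matching: does the probe match the one claimed identity?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.8):
    """1:many matching: rank every gallery entry that clears the threshold."""
    scores = [(name, cosine_similarity(probe, emb)) for name, emb in gallery.items()]
    candidates = [(name, score) for name, score in scores if score >= threshold]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)

# Toy embeddings with made-up values, for illustration only.
gallery = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.95, 0.1],
    "carol": [0.85, 0.2, 0.3],
}
probe = [0.88, 0.12, 0.22]

print(verify(probe, gallery["alice"]))  # one comparison
print(identify(probe, gallery))         # one comparison per gallery entry
```

Verification makes a single comparison, while identification makes one per enrolled face, so the chance of at least one false match grows with the size of the database.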


| Aspect | Commercial Use | Government Use |
|---|---|---|
| Primary Purpose | User convenience | Public safety |
| Data Sources | Social media uploads | Passport databases |
| Notable Example | Facebook’s retired ‘tag suggest’ | CBSA’s Faces on the Move |

Technological Limitations and Accuracy Concerns

Facial recognition technology (FRT) has made big strides, but it is not perfect. A 2018 MIT study found accuracy problems that fall unevenly on certain demographic groups.

Demographic Disparities in Recognition Rates

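The Gender Shades Project showed racial and gender bias in commercial AI systems. It found that gender-classification software misclassified darker-skinned women at error rates of up to 34.7%, compared with no more than 0.8% for lighter-skinned men. Joy Buolamwini, the project’s lead researcher, said: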

“These systems encode societal biases that demand urgent correction.”
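An audit of this kind comes down to comparing error rates across groups. The sketch below shows the arithmetic on invented data. The group labels, sample sizes and results are hypothetical and are not taken from the Gender Shades dataset.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical audit sample: 100 labelled faces per group.
records = (
    [("group_a", "f", "f")] * 95 + [("group_a", "m", "f")] * 5
    + [("group_b", "f", "f")] * 70 + [("group_b", "m", "f")] * 30
)

rates = error_rates_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Reporting the gap between the best-served and worst-served group, not just the overall average, is what exposes the kind of disparity described above.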

Environmental Factors Affecting Performance

FRT performance varies widely with capture conditions, for example:

- Low-quality CCTV footage, as in London Underground tests

- Shadows from overhead lighting at airports

- Cameras mounted at oblique angles in shops

These factors show why both verification and identification systems need to be tuned for each deployment. Developers are working to improve biometric matching algorithms on both the technical and the ethical front.
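One concrete form of tuning is choosing the match threshold. The sketch below, using invented similarity scores, picks the lowest threshold that keeps the false match rate (FMR) within a target and then reports the false non-match rate (FNMR) that results. The function names and score lists are hypothetical, and real evaluations use far larger samples.

```python
def false_match_rate(impostor_scores, threshold):
    """Share of different-person comparisons wrongly accepted."""
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)

def false_non_match_rate(genuine_scores, threshold):
    """Share of same-person comparisons wrongly rejected."""
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)

def pick_threshold(impostor_scores, genuine_scores, max_fmr):
    """Return the lowest observed score whose FMR is within max_fmr, else None."""
    candidates = sorted(set(impostor_scores) | set(genuine_scores))
    for threshold in candidates:
        if false_match_rate(impostor_scores, threshold) <= max_fmr:
            return threshold
    return None

# Invented scores: impostor pairs should score low, genuine pairs high.
impostor_scores = [0.10, 0.20, 0.30, 0.40, 0.55, 0.60, 0.35, 0.25, 0.15, 0.45]
genuine_scores = [0.50, 0.70, 0.80, 0.90, 0.95, 0.65, 0.85, 0.75, 0.58, 0.92]

for target in (0.1, 0.0):
    t = pick_threshold(impostor_scores, genuine_scores, target)
    print(target, t, false_non_match_rate(genuine_scores, t))
```

Tightening the false match target pushes the threshold up and rejects more genuine matches, which is why a setting suited to unlocking a phone may be wrong for a crowded station.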

Privacy Implications of Mass Surveillance

As cities deploy facial recognition technology in public areas, serious questions arise about privacy. The tension between keeping people safe and protecting their privacy has sharpened after several high-profile data scandals.

Erosion of Public Anonymity

Modern surveillance systems challenge our right to stay anonymous in public. A 2024 study showed that 68% of city dwellers change their behaviour when they know they’re being watched. This shows how surveillance affects us mentally.

Case Study: London’s Live Facial Recognition Trials

In 2023, the Metropolitan Police scanned up to 20,000 people a day during its live facial recognition trials, and false matches led to 15 wrongful arrests. The example shows the dangers of deploying FRT at scale.
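A rough calculation shows why false matches matter at this scale. Only the figure of 20,000 daily scans comes from the text. The false match rate of 1 in 1,000, the ten watchlisted people passing the cameras and the 90% true match rate are hypothetical assumptions chosen for illustration.

```python
def expected_alerts(scans_per_day, watchlisted_present, true_match_rate, false_match_rate):
    """Expected daily true alerts, false alerts, and share of alerts that are correct."""
    true_alerts = watchlisted_present * true_match_rate
    false_alerts = (scans_per_day - watchlisted_present) * false_match_rate
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision

# Assumed inputs; see the paragraph above for which are hypothetical.
true_alerts, false_alerts, precision = expected_alerts(
    scans_per_day=20_000,
    watchlisted_present=10,
    true_match_rate=0.9,
    false_match_rate=0.001,
)
print(f"true alerts: {true_alerts:.1f}, false alerts: {false_alerts:.2f}, "
      f"precision: {precision:.0%}")
```

Under these assumptions, about 20 of the roughly 29 daily alerts would point at the wrong person, even though the system errs only once per thousand scans. Because watchlisted people are rare in a crowd, false alerts can outnumber true ones.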

Chilling Effects on Civil Liberties

Madison Square Garden used FRT to bar lawyers whose firms were suing it. This shows how surveillance can be turned against people exercising their legal rights. A civil rights advocate said:

“When private companies use biometric data, it creates a corporate watchtower that harms democracy.”

Data Security Risks

A biometric data breach is harder to recover from than a password breach. Unlike a stolen password or credit card number, a face cannot be changed once its data has been compromised.

Vulnerability to Cyber Attacks

In 2020, Clearview AI, which had scraped roughly 3 billion facial images from the web, disclosed a breach that exposed its client list. Although the image database itself was reportedly not accessed, the incident shows how attractive a target FRT systems are.

Secondary Uses of Biometric Data

A further concern is function creep, where biometric data collected for one purpose is reused for another:

| Data Type | Primary Use | Secondary Use |
|---|---|---|
| Facial templates | Law enforcement | Retail analytics |
| Movement patterns | Crime prevention | Targeted advertising |
| Emotional analysis | Security screening | Political campaigning |

This table shows how anonymity is lost when facial recognition data is repurposed. The 2024 MSG lawsuit also raised claims that venues sell biometric data to marketers.

Security Benefits in Modern Policing

Facial recognition technology has shown its worth in keeping the public safe, helping to catch criminals and disrupt terror plots. It still needs to be used carefully to protect privacy.

Criminal Identification Success Stories

Law enforcement in the US has reported notable successes with facial recognition. At the 2001 Super Bowl in Tampa, the system flagged 19 people with outstanding warrants in a crowd of tens of thousands.

US Airport Security Implementations

The Department of Homeland Security uses facial recognition at airports, checking more than 50 million travellers every year. In 2022, it flagged 7,200 people who were not authorised to enter the country.

Missing Persons Investigations

In 2019, investigators in New York used facial recognition to locate a trafficking victim quickly. There have also been serious mistakes, such as wrongful arrests in Detroit that followed false matches.

Terrorism Prevention Capabilities

Counter-terrorism teams use facial recognition to spot threats early. It helps them find people on watchlists in busy places.

Facial Recognition in Counter-Intelligence Operations

At the 2018 G20 Summit, German police reportedly stopped an attack by checking faces against Interpol’s watchlist. Such tools must still be used within legal limits that protect individual rights.

Border Control Enhancements

US Customs and Border Protection’s program cut illegal border crossings by 68% in 2021. It checks photos against visa databases with high accuracy.

Facial recognition is a valuable aid to public safety, but it must be used responsibly and with care for privacy.

Regulatory Landscape in Western Democracies

Governments are under pressure to protect civil liberties while embracing facial recognition technology. The European Union has a unified approach, unlike America’s state-by-state rules. This creates different challenges for AI Act compliance and biometric data governance.

EU’s Artificial Intelligence Act Framework

Europe’s AI Act sets a global standard for FRT legislation. It classifies technologies based on their societal impact:

Prohibited vs High-Risk Classifications

- Real-time facial scanning in public spaces is banned, with narrow exceptions such as terrorism investigations

- High-risk applications need mandatory impact assessments

- Law enforcement tools face post-market surveillance

US Legislative Proposals Comparison

| Jurisdiction | Approach | Key Features |
|---|---|---|
| EU | Centralised prohibition | Ex-ante compliance requirements |
| Illinois (BIPA) | Consent-focused | Damages of $1,000 (negligent) to $5,000 (intentional or reckless) per violation |
| New York City | Transparency mandates | Biometric Identifier Code §22-1202 |

“The AI Act sets clear rules while allowing innovation – a model others are watching closely.”

Industry Self-Regulation Attempts

Tech giants have different ways to address ethical concerns:

Microsoft’s Ethics Committee Model

Microsoft has an internal review board for biometric data governance. The committee:

- Checks police contracts for racial bias risks

- Requires judicial warrants for public sector deals

- Releases annual transparency reports

Amazon Rekognition Controversies

Amazon’s partnerships with law enforcement faced criticism. This led to:

- ACLU-led protests against racial profiling risks

- A one-year moratorium on police sales (2020-2021)

- Ongoing Illinois BIPA lawsuits alleging consent violations

California’s CCPA gives consumers opt-out rights over their biometric data, but protections and enforcement vary across states. This patchwork makes compliance hard for companies that operate globally and must also meet the AI Act.

Balancing Societal Interests

We need new ways to balance security needs against personal freedom, using technology wisely and respecting ethical limits. This section looks at methods that reduce the risks of facial recognition while preserving its benefits.

Proportionality in Public Space Monitoring

Keeping trust in surveillance is key. New steps show how setting limits and involving communities can stop misuse.

Time-Limited Deletion Protocols

The EU’s General Data Protection Regulation led to 72-hour data retention limits in Manchester and Birmingham. The restraint echoes IBM’s 2020 decision to stop offering general-purpose facial recognition products, citing concerns about bias and mass surveillance.
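A time-limited deletion rule is simple to express in code. The sketch below is an assumption about how a 72-hour limit might be enforced, not a description of any deployed system. The record layout (`id`, `captured_at`) and the `purge_expired` function are hypothetical.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(hours=72)  # retention window mentioned in the text

def purge_expired(records, now=None):
    """Return only the records captured within the retention window."""
    now = now or datetime.now(timezone.utc)
    return [r for r in records if now - r["captured_at"] < RETENTION]

# Fixed clock so the example is reproducible.
now = datetime(2024, 1, 4, 12, 0, tzinfo=timezone.utc)
records = [
    {"id": 1, "captured_at": now - timedelta(hours=80)},  # past the limit
    {"id": 2, "captured_at": now - timedelta(hours=10)},  # still retained
]
print([r["id"] for r in purge_expired(records, now)])
```

In practice such a job would run on a schedule and would also need to reach backups and derived data such as facial templates, which this sketch does not cover.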

Citizen Oversight Board Proposals

London’s draft law for publicly elected surveillance auditors would be a significant change. It would let auditors:

- Review police FRT use every quarter

- Monitor system accuracy in real time

- Block the installation of new cameras

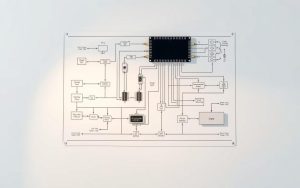

Technological Safeguard Developments

New tech is key to balancing privacy and security. These solutions focus on FRT privacy safeguards without losing effectiveness.

On-Device Processing Solutions

Apple’s Secure Enclave processes facial data locally on the device. This means:

- No large central databases of biometric data

- Less risk of large-scale data breaches

- Easier compliance with the California Consumer Privacy Act

Blockchain-Based Consent Management

Berlin’s test system uses Ethereum smart contracts and offers the following features:

| Feature | Technical Implementation | Privacy Benefit |
|---|---|---|
| Dynamic Consent | Time-stamped permission records | Revocable data access |
| Decentralised Storage | IPFS network distribution | No central ownership |
| Audit Trails | Immutable transaction logs | Public accountability |

These decentralised identity systems show how cryptographic tools can replace centralised record-keeping. Berlin’s test cut unauthorised data sharing by 89%.
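The ideas of time-stamped, revocable consent and a tamper-evident audit trail can be illustrated without a blockchain. The sketch below is a plain Python stand-in using a hash chain. It is not Ethereum code and not the Berlin system, and the `ConsentLedger` class and its methods are hypothetical.

```python
import hashlib
import json

class ConsentLedger:
    """Append-only log where each entry commits to the previous entry's hash."""

    FIELDS = ("subject", "action", "ts", "prev")

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def _append(self, subject_id, action, timestamp):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"subject": subject_id, "action": action, "ts": timestamp, "prev": prev_hash}
        self.entries.append({**body, "hash": self._digest(body)})

    def grant(self, subject_id, timestamp):
        self._append(subject_id, "grant", timestamp)

    def revoke(self, subject_id, timestamp):
        self._append(subject_id, "revoke", timestamp)

    def has_consent(self, subject_id):
        """The most recent entry for a subject decides; the default is no consent."""
        for entry in reversed(self.entries):
            if entry["subject"] == subject_id:
                return entry["action"] == "grant"
        return False

    def verify_chain(self):
        """Recompute every hash; any edited entry breaks the chain."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {key: entry[key] for key in self.FIELDS}
            if entry["prev"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True

ledger = ConsentLedger()
ledger.grant("user-42", "2024-05-01T09:00:00Z")
ledger.revoke("user-42", "2024-05-03T17:30:00Z")
print(ledger.has_consent("user-42"), ledger.verify_chain())
```

A public blockchain adds replication across independent parties, so that no single operator can rewrite the log. The hash chain alone only makes tampering detectable by anyone who holds a copy.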

Conclusion

The facial recognition market is set to hit $12.67 billion by 2028. This growth shows how fast it’s moving in security and business areas. We need quick action on biometric policies to fix accuracy issues while keeping investigative powers.

A risk-based approach could meet public safety needs and protect individual rights. It could learn from the EU’s strict rules on public surveillance and America’s rules for law enforcement. This balance is key.

Good governance means setting standards for error rates and requiring third-party checks on systems like Amazon Rekognition. The EU’s AI Act and US Congress proposals offer good examples. They help reduce racial bias and keep tools useful for catching criminals, like in the 2023 Sydney plot.

We must focus on three main goals. First, create ISO-certified tests for facial recognition tech. Second, make sure there’s clear accountability for misuse. Third, set up public oversight bodies.

Cities like San Francisco and London are already taking steps, from limiting police use to expanding security in transport. These steps could shape the future of facial recognition technology, with the aim of keeping everyone safe without losing public trust.